This is the script I ran:

```python
from transformers import pipeline


def test_pipeline():
    pipe = pipeline("text-generation", model="meta-llama/Llama-2-7b-hf", device_map="auto")
    # Prompt: "Where is the capital of China?"
    pipe.predict('中国的首都在哪里?')
```

It fails with the following error:

D:\rgzn_source_code\ai_testing\venv\Scripts\python.exe "D:/dev_tool/JetBrains/PyCharm Community Edition 2023.2.1/plugins/python-ce/helpers/pycharm/_jb_pytest_runner.py" --target test_pipeline.py::test_pipeline

Testing started at 10:57 PM ...

Launching pytest with arguments test_pipeline.py::test_pipeline --no-header --no-summary -q in D:\rgzn_source_code\ai_testing\src\tests\llama

============================= test session starts =============================

collecting ... collected 1 item

test_pipeline.py::test_pipeline

============================= 1 failed in 10.87s ==============================

-------------------------------- live log call --------------------------------

D:\rgzn_source_code\ai_testing\venv\lib\site-packages\urllib3\connectionpool.py:1048 22:57:22 DEBUG - Starting new HTTPS connection (1): huggingface.co:443

D:\rgzn_source_code\ai_testing\venv\lib\site-packages\urllib3\connectionpool.py:546 22:57:24 DEBUG - https://huggingface.co:443 "HEAD /meta-llama/Llama-2-7b-hf/resolve/main/config.json HTTP/1.1" 403 0

FAILED

llama\test_pipeline.py:5 (test_pipeline)

response = <Response [403]>, endpoint_name = None

def hf_raise_for_status(response: Response, endpoint_name: Optional[str] = None) -> None:

"""

Internal version of `response.raise_for_status()` that will refine a

potential HTTPError. Raised exception will be an instance of `HfHubHTTPError`.

This helper is meant to be the unique method to raise_for_status when making a call

to the Hugging Face Hub.

Example:

```py

import requests

from huggingface_hub.utils import get_session, hf_raise_for_status, HfHubHTTPError

response = get_session().post(...)

try:

hf_raise_for_status(response)

except HfHubHTTPError as e:

print(str(e)) # formatted message

e.request_id, e.server_message # details returned by server

# Complete the error message with additional information once it's raised

e.append_to_message("\n`create_commit` expects the repository to exist.")

raise

```

Args:

response (`Response`):

Response from the server.

endpoint_name (`str`, *optional*):

Name of the endpoint that has been called. If provided, the error message

will be more complete.

<Tip warning={true}>

Raises when the request has failed:

- [`~utils.RepositoryNotFoundError`]

If the repository to download from cannot be found. This may be because it

doesn't exist, because `repo_type` is not set correctly, or because the repo

is `private` and you do not have access.

- [`~utils.GatedRepoError`]

If the repository exists but is gated and the user is not on the authorized

list.

- [`~utils.RevisionNotFoundError`]

If the repository exists but the revision couldn't be find.

- [`~utils.EntryNotFoundError`]

If the repository exists but the entry (e.g. the requested file) couldn't be

find.

- [`~utils.BadRequestError`]

If request failed with a HTTP 400 BadRequest error.

- [`~utils.HfHubHTTPError`]

If request failed for a reason not listed above.

</Tip>

"""

try:

> response.raise_for_status()

..\..\..\venv\lib\site-packages\huggingface_hub\utils\_errors.py:261:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <Response [403]>

def raise_for_status(self):

"""Raises :class:`HTTPError`, if one occurred."""

http_error_msg = ""

if isinstance(self.reason, bytes):

# We attempt to decode utf-8 first because some servers

# choose to localize their reason strings. If the string

# isn't utf-8, we fall back to iso-8859-1 for all other

# encodings. (See PR #3538)

try:

reason = self.reason.decode("utf-8")

except UnicodeDecodeError:

reason = self.reason.decode("iso-8859-1")

else:

reason = self.reason

if 400 <= self.status_code < 500:

http_error_msg = (

f"{self.status_code} Client Error: {reason} for url: {self.url}"

)

elif 500 <= self.status_code < 600:

http_error_msg = (

f"{self.status_code} Server Error: {reason} for url: {self.url}"

)

if http_error_msg:

> raise HTTPError(http_error_msg, response=self)

E requests.exceptions.HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/meta-llama/Llama-2-7b-hf/resolve/main/config.json

..\..\..\venv\lib\site-packages\requests\models.py:1021: HTTPError

The above exception was the direct cause of the following exception:

path_or_repo_id = 'meta-llama/Llama-2-7b-hf', filename = 'config.json'

cache_dir = 'C:\\Users\\z00498ta/.cache\\huggingface\\hub'

force_download = False, resume_download = False, proxies = None

use_auth_token = None, revision = None, local_files_only = False, subfolder = ''

user_agent = 'transformers/4.27.1; python/3.10.11; session_id/7b6c96c457fe426d88397cb36a644adc; torch/2.0.0+cu118; file_type/config; from_auto_class/True; using_pipeline/text-generation'

_raise_exceptions_for_missing_entries = True

_raise_exceptions_for_connection_errors = True, _commit_hash = None

def cached_file(

path_or_repo_id: Union[str, os.PathLike],

filename: str,

cache_dir: Optional[Union[str, os.PathLike]] = None,

force_download: bool = False,

resume_download: bool = False,

proxies: Optional[Dict[str, str]] = None,

use_auth_token: Optional[Union[bool, str]] = None,

revision: Optional[str] = None,

local_files_only: bool = False,

subfolder: str = "",

user_agent: Optional[Union[str, Dict[str, str]]] = None,

_raise_exceptions_for_missing_entries: bool = True,

_raise_exceptions_for_connection_errors: bool = True,

_commit_hash: Optional[str] = None,

):

"""

Tries to locate a file in a local folder and repo, downloads and cache it if necessary.

Args:

path_or_repo_id (`str` or `os.PathLike`):

This can be either:

- a string, the *model id* of a model repo on huggingface.co.

- a path to a *directory* potentially containing the file.

filename (`str`):

The name of the file to locate in `path_or_repo`.

cache_dir (`str` or `os.PathLike`, *optional*):

Path to a directory in which a downloaded pretrained model configuration should be cached if the standard

cache should not be used.

force_download (`bool`, *optional*, defaults to `False`):

Whether or not to force to (re-)download the configuration files and override the cached versions if they

exist.

resume_download (`bool`, *optional*, defaults to `False`):

Whether or not to delete incompletely received file. Attempts to resume the download if such a file exists.

proxies (`Dict[str, str]`, *optional*):

A dictionary of proxy servers to use by protocol or endpoint, e.g., `{'http': 'foo.bar:3128',

'http://hostname': 'foo.bar:4012'}.` The proxies are used on each request.

use_auth_token (`str` or *bool*, *optional*):

The token to use as HTTP bearer authorization for remote files. If `True`, will use the token generated

when running `huggingface-cli login` (stored in `~/.huggingface`).

revision (`str`, *optional*, defaults to `"main"`):

The specific model version to use. It can be a branch name, a tag name, or a commit id, since we use a

git-based system for storing models and other artifacts on huggingface.co, so `revision` can be any

identifier allowed by git.

local_files_only (`bool`, *optional*, defaults to `False`):

If `True`, will only try to load the tokenizer configuration from local files.

subfolder (`str`, *optional*, defaults to `""`):

In case the relevant files are located inside a subfolder of the model repo on huggingface.co, you can

specify the folder name here.

<Tip>

Passing `use_auth_token=True` is required when you want to use a private model.

</Tip>

Returns:

`Optional[str]`: Returns the resolved file (to the cache folder if downloaded from a repo).

Examples:

```python

# Download a model weight from the Hub and cache it.

model_weights_file = cached_file("bert-base-uncased", "pytorch_model.bin")

```"""

# Private arguments

# _raise_exceptions_for_missing_entries: if False, do not raise an exception for missing entries but return

# None.

# _raise_exceptions_for_connection_errors: if False, do not raise an exception for connection errors but return

# None.

# _commit_hash: passed when we are chaining several calls to various files (e.g. when loading a tokenizer or

# a pipeline). If files are cached for this commit hash, avoid calls to head and get from the cache.

if is_offline_mode() and not local_files_only:

logger.info("Offline mode: forcing local_files_only=True")

local_files_only = True

if subfolder is None:

subfolder = ""

path_or_repo_id = str(path_or_repo_id)

full_filename = os.path.join(subfolder, filename)

if os.path.isdir(path_or_repo_id):

resolved_file = os.path.join(os.path.join(path_or_repo_id, subfolder), filename)

if not os.path.isfile(resolved_file):

if _raise_exceptions_for_missing_entries:

raise EnvironmentError(

f"{path_or_repo_id} does not appear to have a file named {full_filename}. Checkout "

f"'https://huggingface.co/{path_or_repo_id}/{revision}' for available files."

)

else:

return None

return resolved_file

if cache_dir is None:

cache_dir = TRANSFORMERS_CACHE

if isinstance(cache_dir, Path):

cache_dir = str(cache_dir)

if _commit_hash is not None and not force_download:

# If the file is cached under that commit hash, we return it directly.

resolved_file = try_to_load_from_cache(

path_or_repo_id, full_filename, cache_dir=cache_dir, revision=_commit_hash

)

if resolved_file is not None:

if resolved_file is not _CACHED_NO_EXIST:

return resolved_file

elif not _raise_exceptions_for_missing_entries:

return None

else:

raise EnvironmentError(f"Could not locate {full_filename} inside {path_or_repo_id}.")

user_agent = http_user_agent(user_agent)

try:

# Load from URL or cache if already cached

> resolved_file = hf_hub_download(

path_or_repo_id,

filename,

subfolder=None if len(subfolder) == 0 else subfolder,

revision=revision,

cache_dir=cache_dir,

user_agent=user_agent,

force_download=force_download,

proxies=proxies,

resume_download=resume_download,

use_auth_token=use_auth_token,

local_files_only=local_files_only,

)

..\..\..\venv\lib\site-packages\transformers\utils\hub.py:409:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

..\..\..\venv\lib\site-packages\huggingface_hub\utils\_validators.py:118: in _inner_fn

return fn(*args, **kwargs)

..\..\..\venv\lib\site-packages\huggingface_hub\file_download.py:1344: in hf_hub_download

raise head_call_error

..\..\..\venv\lib\site-packages\huggingface_hub\file_download.py:1230: in hf_hub_download

metadata = get_hf_file_metadata(

..\..\..\venv\lib\site-packages\huggingface_hub\utils\_validators.py:118: in _inner_fn

return fn(*args, **kwargs)

..\..\..\venv\lib\site-packages\huggingface_hub\file_download.py:1606: in get_hf_file_metadata

hf_raise_for_status(r)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _


try:

response.raise_for_status()

except HTTPError as e:

error_code = response.headers.get("X-Error-Code")

if error_code == "RevisionNotFound":

message = f"{response.status_code} Client Error." + "\n\n" + f"Revision Not Found for url: {response.url}."

raise RevisionNotFoundError(message, response) from e

elif error_code == "EntryNotFound":

message = f"{response.status_code} Client Error." + "\n\n" + f"Entry Not Found for url: {response.url}."

raise EntryNotFoundError(message, response) from e

elif error_code == "GatedRepo":

message = (

f"{response.status_code} Client Error." + "\n\n" + f"Cannot access gated repo for url {response.url}."

)

> raise GatedRepoError(message, response) from e

E huggingface_hub.utils._errors.GatedRepoError: 403 Client Error. (Request ID: Root=1-651eced4-228bb4036055ff93004b7956;7573f3a0-4395-41fc-b4f6-91b8fde68ed5)

E

E Cannot access gated repo for url https://huggingface.co/meta-llama/Llama-2-7b-hf/resolve/main/config.json.

E Your request to access model meta-llama/Llama-2-7b-hf is awaiting a review from the repo authors.

..\..\..\venv\lib\site-packages\huggingface_hub\utils\_errors.py:277: GatedRepoError

During handling of the above exception, another exception occurred:

def test_pipeline():

> pipe = pipeline("text-generation", model="meta-llama/Llama-2-7b-hf", device_map="auto")

test_pipeline.py:7:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

..\..\..\venv\lib\site-packages\transformers\pipelines\__init__.py:692: in pipeline

config = AutoConfig.from_pretrained(model, _from_pipeline=task, **hub_kwargs, **model_kwargs)

..\..\..\venv\lib\site-packages\transformers\models\auto\configuration_auto.py:896: in from_pretrained

config_dict, unused_kwargs = PretrainedConfig.get_config_dict(pretrained_model_name_or_path, **kwargs)

..\..\..\venv\lib\site-packages\transformers\configuration_utils.py:573: in get_config_dict

config_dict, kwargs = cls._get_config_dict(pretrained_model_name_or_path, **kwargs)

..\..\..\venv\lib\site-packages\transformers\configuration_utils.py:628: in _get_config_dict

resolved_config_file = cached_file(

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _


try:

# Load from URL or cache if already cached

resolved_file = hf_hub_download(

path_or_repo_id,

filename,

subfolder=None if len(subfolder) == 0 else subfolder,

revision=revision,

cache_dir=cache_dir,

user_agent=user_agent,

force_download=force_download,

proxies=proxies,

resume_download=resume_download,

use_auth_token=use_auth_token,

local_files_only=local_files_only,

)

except RepositoryNotFoundError:

> raise EnvironmentError(

f"{path_or_repo_id} is not a local folder and is not a valid model identifier "

"listed on 'https://huggingface.co/models'\nIf this is a private repository, make sure to "

"pass a token having permission to this repo with `use_auth_token` or log in with "

"`huggingface-cli login` and pass `use_auth_token=True`."

)

E OSError: meta-llama/Llama-2-7b-hf is not a local folder and is not a valid model identifier listed on 'https://huggingface.co/models'

E If this is a private repository, make sure to pass a token having permission to this repo with `use_auth_token` or log in with `huggingface-cli login` and pass `use_auth_token=True`.

..\..\..\venv\lib\site-packages\transformers\utils\hub.py:424: OSError

Process finished with exit code 1

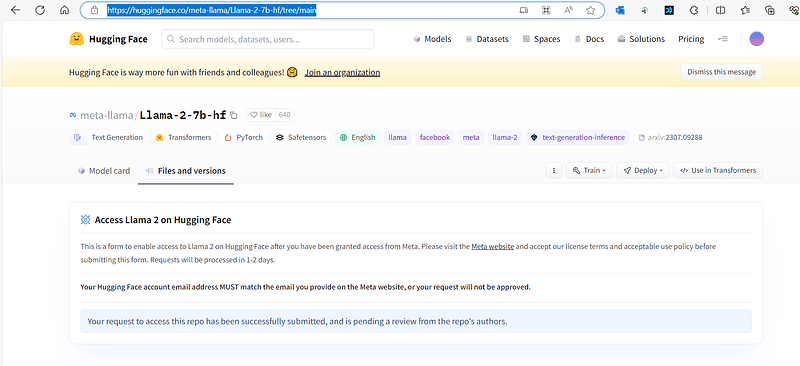

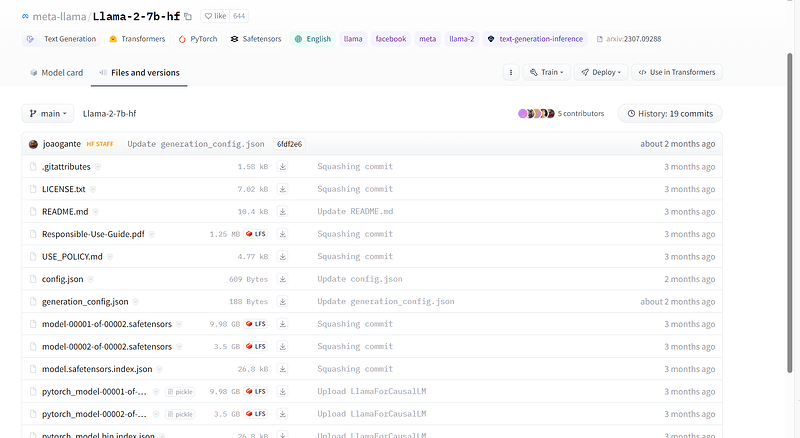

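After the error, I tried opening the model page on the Hugging Face site, meta-llama/Llama-2-7b-hf at main (huggingface.co), but clicking "Files and versions" does not let me in. Do I have to wait for authorization before I can download the model? Could that be what causes the error above?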
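One way to check whether the 403 comes from the gated-access review rather than from a missing token is to repeat the HEAD request shown in the log and read the `X-Error-Code` header that `hf_raise_for_status` inspects in the traceback above. The following is a minimal standard-library sketch, assuming the Hub keeps returning that header; `classify_hub_error` and `probe` are hypothetical helper names, and only the three error codes visible in the traceback are handled.

```python
import urllib.error
import urllib.request
from typing import Mapping, Optional


def classify_hub_error(status: int, headers: Mapping[str, str]) -> str:
    """Map an HTTP status plus the Hub's X-Error-Code header to a short label.

    `headers` may be a dict or an http.client.HTTPMessage; both provide .get().
    Only the codes seen in the hf_raise_for_status traceback are recognized.
    """
    error_code = headers.get("X-Error-Code")
    if error_code == "GatedRepo":
        # Repo exists, but this account is not (yet) on the authorized list.
        return "gated"
    if error_code == "RevisionNotFound":
        return "revision-not-found"
    if error_code == "EntryNotFound":
        return "entry-not-found"
    if 200 <= status < 300:
        return "ok"
    return f"http-{status}"


def probe(repo_id: str, filename: str = "config.json", token: Optional[str] = None) -> str:
    """Send the same kind of HEAD request as in the log and classify the reply."""
    url = f"https://huggingface.co/{repo_id}/resolve/main/{filename}"
    request = urllib.request.Request(url, method="HEAD")
    if token:
        # The Hub accepts user access tokens as a Bearer token.
        request.add_header("Authorization", f"Bearer {token}")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return classify_hub_error(response.status, response.headers)
    except urllib.error.HTTPError as err:
        # 4xx/5xx replies are raised as HTTPError; the headers are still available.
        return classify_hub_error(err.code, err.headers)
```

For example, `probe("meta-llama/Llama-2-7b-hf", token=...)` would be expected to return `"gated"` while the access request is still pending, provided the Hub answers the way it did in the log.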

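I'd like to fix the error, but I'm not sure how to pass the parameter that the error message suggests: where in the code does `use_auth_token=True` go? Running `huggingface-cli login` locally works: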

C:\Users\z00498ta>huggingface-cli login


To login, `huggingface_hub` requires a token generated from https://huggingface.co/settings/tokens .

Token can be pasted using 'Right-Click'.

Token:

Add token as git credential? (Y/n) Y

Token is valid (permission: write).

Your token has been saved in your configured git credential helpers (manager-core).

Your token has been saved to C:\Users\z00498ta\.cache\huggingface\token

Login successful
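In transformers 4.27.1 (the version in the user agent string above), `use_auth_token` is a keyword argument of `pipeline()` itself, so it sits next to `model=` and `device_map=`; the traceback shows it being forwarded to `AutoConfig.from_pretrained` through `hub_kwargs`. Below is a minimal sketch, assuming the token file is at the default location reported by `huggingface-cli login` (optionally moved by `HF_HOME`). `saved_token_path`, `read_saved_token`, and `build_pipeline` are hypothetical helpers. A valid token only helps once the access request is approved; the `GatedRepoError` above says the request is still awaiting review.

```python
import os
from pathlib import Path
from typing import Optional


def saved_token_path() -> Path:
    # Assumption: huggingface-cli login writes <HF_HOME>/token, with HF_HOME
    # defaulting to ~/.cache/huggingface (matches the path printed above).
    hf_home = os.environ.get("HF_HOME")
    base = Path(hf_home) if hf_home else Path.home() / ".cache" / "huggingface"
    return base / "token"


def read_saved_token(path: Optional[Path] = None) -> Optional[str]:
    """Return the saved login token, or None if the file is missing or empty."""
    path = path or saved_token_path()
    try:
        token = path.read_text(encoding="utf-8").strip()
    except FileNotFoundError:
        return None
    return token or None


def build_pipeline(model_id: str = "meta-llama/Llama-2-7b-hf"):
    """Create the text-generation pipeline, authenticating with the saved token."""
    if read_saved_token() is None:
        raise RuntimeError("No saved Hugging Face token; run `huggingface-cli login` first.")
    # Imported lazily so the token helpers work without transformers installed.
    from transformers import pipeline

    # use_auth_token=True tells transformers 4.27.x to send the token saved
    # by huggingface-cli login with every Hub request.
    return pipeline(
        "text-generation",
        model=model_id,
        device_map="auto",
        use_auth_token=True,
    )
```

Usage: `pipe = build_pipeline()` followed by `pipe('中国的首都在哪里?')[0]["generated_text"]`. Newer transformers releases accept `token=` in place of `use_auth_token=`, so check the signature of the installed version.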