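This article is a good one, so I am reposting it in the 测试人社区 (Testers Community) for everyone to study and refer to. Original link: https://testing.googleblog.com/2020/11/fixing-test-hourglass.html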

Fixing a Test Hourglass

Monday, November 09, 2020

By Alan Myrvold

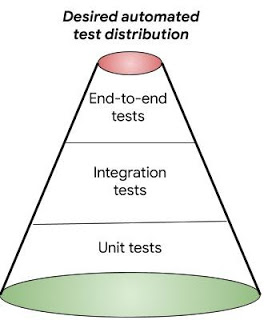

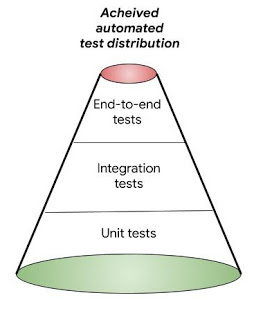

Automated tests make it safer and faster to create new features, fix bugs, and refactor code. When planning the automated tests, we envision a pyramid with a strong foundation of small unit tests, some well-designed integration tests, and a few large end-to-end tests. As Just Say No to More End-to-End Tests explains, tests should be fast, reliable, and specific; end-to-end tests, however, are often slow, unreliable, and difficult to debug.

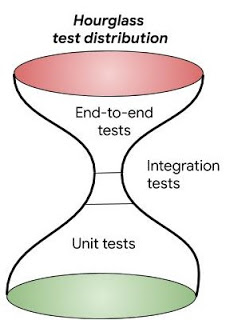

As software projects grow, the shape of our test distribution often becomes undesirable: either top-heavy (no unit or medium integration tests) or like an hourglass.

The hourglass test distribution has a large set of unit tests, a large set of end-to-end tests, and few or no medium integration tests.

To transform the hourglass back into a pyramid, so that you can test the integration of components in a reliable, sustainable way, you need to figure out how to architect the system under test and the test infrastructure, and then improve both system testability and test code.

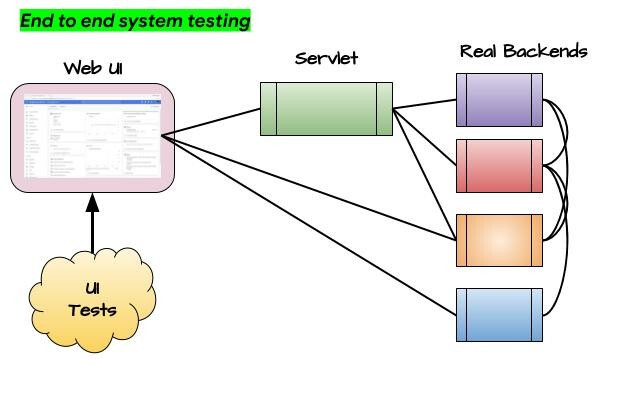

I worked on a project with a web UI, a server, and many backends. There were unit tests at all levels with good coverage and a quickly increasing set of end-to-end tests.

The end-to-end tests found issues that the unit tests missed, but they ran slowly, and environmental issues caused spurious failures, including test data corruption. In addition, some functional areas were difficult to test because they covered more than the unit but required state within the system that was hard to set up.

We eventually found a good test architecture for faster, more reliable integration tests, but with some missteps along the way.

An example UI-level end-to-end test, written in Protractor, looked something like this:

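```javascript
describe('Terms of service are handled', () => {
  it('accepts terms of service', async () => {
    const user = getUser('termsNotAccepted');

    // First login: the user has not accepted yet, so the dialog appears.
    await login(user);
    await see(termsOfServiceDialog());
    await click('Accept');
    await logoff();

    // Second login: the acceptance was stored, so the dialog must not reappear.
    await login(user);
    await not.see(termsOfServiceDialog());
  });
});
```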

This test logs on as a user, sees the terms of service dialog that the user needs to accept, accepts it, then logs off and logs back on to ensure the user is not prompted again.

This terms of service test was a challenge to run reliably, because once an agreement was accepted, the backend server had no RPC method to reverse the operation and “un-accept” the TOS. We could create a new user with each test, but that was time-consuming and hard to clean up.

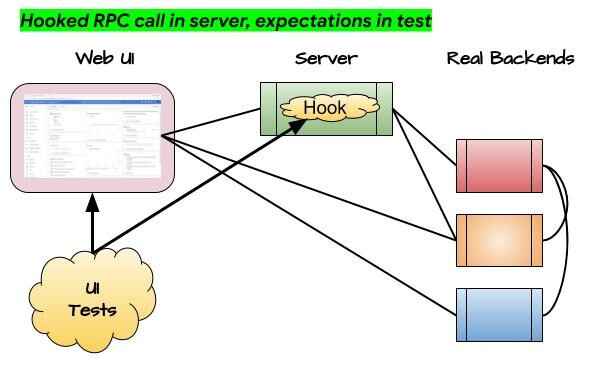

The first attempt to make the terms of service feature testable without end-to-end testing was to hook the server RPC method and set the expectations within the test. The hook intercepts the RPC call and provides expected results instead of calling the backend API.

This approach worked. The test interacted with the backend RPC without really calling it, but it cluttered the test with extra logic.

```javascript
describe('Terms of service are handled', () => {
  it('accepts terms of service', async () => {
    const user = getUser('someUser');

    // Set the value the hooked TermsOfService.Get() RPC returns before logging in.
    await hook('TermsOfService.Get()', true);
    await login(user);
    await see(termsOfServiceDialog());
    await click('Accept');
    await logoff();

    // Change the hooked value so the second login sees the post-acceptance state.
    await hook('TermsOfService.Get()', false);
    await login(user);
    await not.see(termsOfServiceDialog());
  });
});
```

The test met the goal of testing the integration of the web UI and server, but it was unreliable. As the system scaled under load, there were several server processes and no guarantee that the UI would access the same server for all RPC calls, so the hook might be set in one server process and the UI accessed in another.

The hook also wasn’t at a natural system boundary, which made it require more maintenance as the system evolved and code was refactored.
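The multi-process failure described above can be made concrete with a small model. This is only a sketch under assumptions: `ServerProcess`, `LoadBalancer`, and the round-robin routing are hypothetical stand-ins, not the project's real server or hook API. It shows a hook installed on one process being invisible to the process that happens to serve the UI's next call.

```javascript
// Hypothetical model of the hooked server; none of these names come from the real system.
class ServerProcess {
  constructor(name) {
    this.name = name;
    this.hooks = new Map(); // hook state lives in this process's memory only
  }

  // Install a canned result for an RPC method on this process.
  hook(method, result) {
    this.hooks.set(method, result);
  }

  // Serve an RPC: use the hooked result if present, else call the real backend.
  call(method, realBackend) {
    return this.hooks.has(method) ? this.hooks.get(method) : realBackend(method);
  }
}

// Assumed round-robin load balancer: consecutive requests land on different processes.
class LoadBalancer {
  constructor(processes) {
    this.processes = processes;
    this.next = 0;
  }

  pick() {
    const chosen = this.processes[this.next];
    this.next = (this.next + 1) % this.processes.length;
    return chosen;
  }
}

const processA = new ServerProcess('A');
const processB = new ServerProcess('B');
const balancer = new LoadBalancer([processA, processB]);
const realBackend = () => 'real backend response';

// The test installs the hook; the request happens to reach process A.
balancer.pick().hook('TermsOfService.Get()', 'hooked response');

// The UI's next RPC is routed to process B, which never saw the hook.
const uiResult = balancer.pick().call('TermsOfService.Get()', realBackend);
console.log(uiResult); // real backend response
```

In this model the test only passes when the hook and the UI request land on the same process, which is the kind of nondeterminism that made the hooked tests unreliable.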

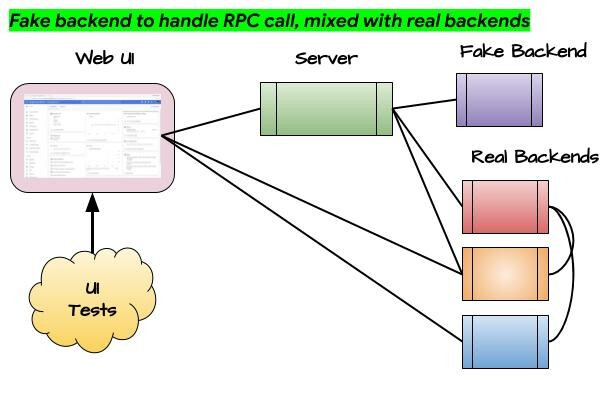

The next design of the test architecture was to fake the backend that eventually processes the terms of service call.

The fake implementation can be quite simple:

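```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class FakeTermsOfService implements TermsOfService.Service {
  // In-memory record of which users have accepted the terms of service.
  private static final Map<String, Boolean> accepted = new ConcurrentHashMap<>();

  @Override
  public TosGetResponse get(TosGetRequest req) {
    // Simplified as in the original; a compiling version would wrap this
    // Boolean in a TosGetResponse. Users default to "not accepted".
    return accepted.getOrDefault(req.UserID(), Boolean.FALSE);
  }

  @Override
  public void accept(TosAcceptRequest req) {
    accepted.put(req.UserID(), Boolean.TRUE);
  }
}
```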

And the test is now uncluttered by the expectations:

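```javascript
describe('Terms of service are handled', () => {
  it('accepts terms of service', async () => {
    const user = getUser('termsNotAccepted');

    // First login: the fake reports "not accepted", so the dialog appears.
    await login(user);
    await see(termsOfServiceDialog());
    await click('Accept');
    await logoff();

    // Second login: the fake remembers the acceptance, so no dialog is shown.
    await login(user);
    await not.see(termsOfServiceDialog());
  });
});
```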

Because the fake stores the accepted state in memory, there is no need to reset the state for the next test iteration; it is enough just to restart the fake server.

This worked, but it caused problems when fake and real backends were mixed: state shared among the real backends was now out of sync with the fake backend.

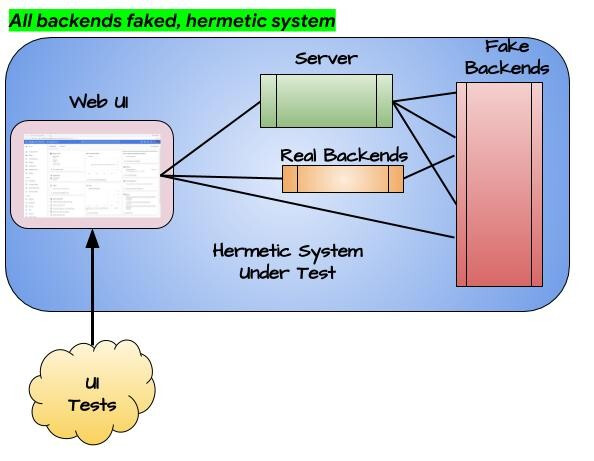

Our final, successful integration test architecture was to provide fake implementations for all except one of the backends, all sharing the same in-memory state. One real backend was included in the system under test because it was tightly coupled with the Web UI. Its dependencies were all wired to fake backends. These are integration tests over the entire system under test, but they remove the backend dependencies. These tests expand the medium-size tests in the test hourglass, allowing us to have fewer end-to-end tests with real backends.
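As a rough illustration of fakes sharing one in-memory state, here is a minimal sketch. The article does not show the real backend interfaces, so `SharedState`, `FakeAccounts`, and `startFakeBackends` are hypothetical names; the `FakeTermsOfService` here is a JavaScript stand-in rather than the project's actual fake.

```javascript
// Hypothetical names throughout; the real backend interfaces are not shown in the article.

// One in-memory store shared by every fake, so their views never drift apart.
class SharedState {
  constructor() {
    this.users = new Map(); // userId -> { tosAccepted: boolean }
  }
}

// Assumed second backend, included only to show two fakes reading the same state.
class FakeAccounts {
  constructor(state) {
    this.state = state;
  }

  createUser(userId) {
    this.state.users.set(userId, { tosAccepted: false });
  }

  exists(userId) {
    return this.state.users.has(userId);
  }
}

class FakeTermsOfService {
  constructor(state) {
    this.state = state;
  }

  // Unknown users are reported as "not accepted".
  get(userId) {
    const user = this.state.users.get(userId);
    return user !== undefined && user.tosAccepted;
  }

  accept(userId) {
    const user = this.state.users.get(userId);
    if (user === undefined) throw new Error(`unknown user: ${userId}`);
    user.tosAccepted = true;
  }
}

// Build a fresh set of fakes; calling this again models restarting them.
function startFakeBackends() {
  const state = new SharedState();
  return { accounts: new FakeAccounts(state), tos: new FakeTermsOfService(state) };
}

let backends = startFakeBackends();
backends.accounts.createUser('termsNotAccepted');
console.log(backends.tos.get('termsNotAccepted')); // false
backends.tos.accept('termsNotAccepted');
console.log(backends.tos.get('termsNotAccepted')); // true

// A restart discards all state, so the next test starts clean.
backends = startFakeBackends();
console.log(backends.accounts.exists('termsNotAccepted')); // false
```

Because both fakes hold a reference to the same store, a user created through one is immediately visible to the other, and a restart resets them together.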

Note that these integration tests are not the only option. For logic in the Web UI, we can write page-level unit tests, which allow the tests to run faster and more reliably. For the terms of service feature, however, we want to test the Web UI and server logic together, so integration tests are a good solution.

This resulted in UI tests that ran, unmodified, on both the real and fake backend systems.
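One way to let the same test run against either set of backends is to keep the choice in configuration, outside the test body. The sketch below assumes this approach; the mode names, the `BACKEND_ENDPOINTS` table, and the addresses are invented for illustration and are not taken from the project.

```javascript
// Hypothetical configuration: the mode names and addresses below are invented
// for illustration and do not come from the article.
const BACKEND_ENDPOINTS = new Map([
  ['real', { termsOfService: 'tos.example.internal:443' }],
  ['fake', { termsOfService: 'localhost:9001' }],
]);

// Return the endpoints the server should be wired to for a given test run.
function resolveBackends(mode) {
  const endpoints = BACKEND_ENDPOINTS.get(mode);
  if (endpoints === undefined) {
    throw new Error(`unknown backend mode: ${mode}`);
  }
  return endpoints;
}

// The scenario depends only on the resolved wiring, never on the mode itself,
// so the same test body runs in both configurations.
function describeRun(mode) {
  const { termsOfService } = resolveBackends(mode);
  return `terms-of-service test -> ${termsOfService}`;
}

console.log(describeRun('fake')); // terms-of-service test -> localhost:9001
console.log(describeRun('real')); // terms-of-service test -> tos.example.internal:443
```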

When run with fake backends the tests were faster and more reliable. This made it easier to add test scenarios that would have been more challenging to set up with the real backends. We also deleted end-to-end tests that were well duplicated by the integration tests, resulting in more integration tests than end-to-end tests.

By iterating, we arrived at a sustainable test architecture for the integration tests.

If you’re facing a test hourglass, the test architecture needed to devise medium tests may not be obvious. I’d recommend experimenting, dividing the system along well-defined interfaces, and making sure the new tests provide value by running faster and more reliably or by unlocking hard-to-test areas.

References

- Just Say No to More End-to-End Tests, Mike Wacker, https://testing.googleblog.com/2015/04/just-say-no-to-more-end-to-end-tests.html

- Test Pyramid & Antipatterns, Khushi, https://khushiy.com/2019/02/07/test-pyramid-antipatterns/

- Testing on the Toilet: Fake Your Way to Better Tests, Jonathan Rockway and Andrew Trenk, https://testing.googleblog.com/2013/06/testing-on-toilet-fake-your-way-to.html

- Testing on the Toilet: Know Your Test Doubles, Andrew Trenk, https://testing.googleblog.com/2013/07/testing-on-toilet-know-your-test-doubles.html

- Hermetic Servers, Chaitali Narla and Diego Salas, https://testing.googleblog.com/2012/10/hermetic-servers.html

- Software Engineering at Google (book), Titus Winters, Tom Manshreck, Hyrum Wright