less nginx.log | awk '$10' | sort -n | awk 'NR<=3{print $1}'

find_top_3(){

awk '{print $1}' nginx.log | sort | uniq -c | sort -nr | head -n 3

}

less nginx.log | awk '{sum+=$(NF-1)}END{print "Average = ", sum/NR}'

find_error_log() {

less nginx.log | awk '{print $1}' | sort | uniq -c | sort -nr | head -3

}

cat nginx.log | awk '{print $1}' | sort | uniq -c | sort -nr | head -n 3

awk '{print $1}' nginx.log | sort | uniq -c | sort -nr | sed -n '1,3p'

less nginx.log | awk '$7 == "/"' | awk '{ sum += $(NF-1)} END {print sum/NR}'

url_avg_time() {

awk '$7 ~ /^\/$/' nginx.log | awk '{sum += $(NF-1)} END {print sum/NR}'

}

url_avg_time() {

less nginx.log | awk '{sum+=$(NF-1)}END{print "Average = ", sum/NR}'

}

less nginx.log | awk '$7=="/"{sum += $(NF-1); n++} END {print "average = " sum/n}'

Forgot to filter for the home page...

less nginx.log | awk '{print $1}'

Homework

- Request counts

url_summary(){

less nginx.log | awk '{print $7}' | sed -E "s@/topics/[0-9]+@/topics/int@g" | sed -E "s@replies/[0-9]+@replies/int@g" | sed -E "s@/2018/[0-9a-z\-]+@/2018/id@g" | sed -E "s@/2017/[0-9a-z\-]+@/2017/id@g" | sed -E "s@/2016/[0-9a-z\-]+@/2016/id@g" | sed -E "s@/2015/[0-9a-z\-]+@/2015/id@g" | sed -E "s@/2014/[0-9a-z\-]+@/2014/id@g" | sed -E "s@/avatar/[0-9]+@/avatar/int@g" | sed -E "s@/avatar/int/[0-9a-z]+@/avatar/int/id@g" | sort | uniq -c

}

- Top 10, sorted

url_summary(){

less nginx.log | awk '{print $7}' | sed -E "s@/topics/[0-9]+@/topics/int@g" | sed -E "s@replies/[0-9]+@replies/int@g" | sed -E "s@/2018/[0-9a-z\-]+@/2018/id@g" | sed -E "s@/2017/[0-9a-z\-]+@/2017/id@g" | sed -E "s@/2016/[0-9a-z\-]+@/2016/id@g" | sed -E "s@/2015/[0-9a-z\-]+@/2015/id@g" | sed -E "s@/2014/[0-9a-z\-]+@/2014/id@g" | sed -E "s@/avatar/[0-9]+@/avatar/int@g" | sed -E "s@/avatar/int/[0-9a-z]+@/avatar/int/id@g" | sort | uniq -c | sort -rn | head -10

}

cat nginx.log | awk '{print $1" "$7" "$9}' | sort -n | uniq -c | sort -n -r | head -10

- Find the 404 and 500 errors in the log

find_error_log(){

less nginx.log | awk '$9~/404|500/'

}
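
A side note on the match above: `$9~/404|500/` is a regex (substring) match, so comparing the field for equality is a bit stricter. Here is a minimal sketch that also tallies the hits per status code. It assumes the status code is field 9, as in the answers in this thread; the function name `count_errors` is made up for illustration.

```shell
# Sketch: count 404 and 500 responses, one line per status code.
# Assumes the HTTP status is field 9 of each log line.
count_errors() {
  awk '$9 == 404 || $9 == 500 { hits[$9]++ }
       END { for (code in hits) print code, hits[code] }' "${1:-nginx.log}" |
    sort
}
```

Call it as `count_errors nginx.log`; each output line is a status code followed by its count.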

2. Find the IPs with the most requests: tally them and output the top 3 IPs with their counts

find_top_3(){

less nginx.log | awk '{print $1}' | sort | uniq -c | sort -nr | head -n 3

}
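
For comparison, the same tally can be done inside a single awk pass with an associative array. This is only a sketch: it assumes the client IP is field 1, and `top_ips` with its optional file and count arguments is a hypothetical name, not part of the assignment.

```shell
# Sketch: count requests per client IP and print the N busiest.
# Assumes field 1 is the client IP. $1 = log file, $2 = how many to show.
top_ips() {
  awk '{ count[$1]++ } END { for (ip in count) print count[ip], ip }' "${1:-nginx.log}" |
    sort -k1,1nr -k2,2 |
    head -n "${2:-3}"
}
```

`top_ips nginx.log 3` prints "count ip" pairs, highest count first; ties are ordered by IP so the output is stable.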

3. Find the average response time for home page requests

url_avg_time(){

less nginx.log | awk '$7 == "/"'| awk 'BEGIN{avg=0; print "avg:"}{print $(NF-1)"+";avg+=$(NF-1)} END{print avg/NR}'

}
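
A recurring pitfall in this thread is dividing by `NR` when only some lines were summed. A minimal sketch that keeps its own counter and guards against an empty match follows. It assumes the path is field 7 and the response time is the second-to-last field, as the answers here do; `home_avg_time` is a made-up name.

```shell
# Sketch: average response time of requests to "/".
# Assumes $7 is the request path and $(NF-1) is the response time.
home_avg_time() {
  awk '$7 == "/" { sum += $(NF-1); n++ }
       END {
         if (n) printf "%.3f\n", sum / n   # divide by matched lines, not NR
         else   print "no matching requests"
       }' "${1:-nginx.log}"
}
```

Counting matches in `n` means the filter and the average can live in one awk program without the result being skewed by other URLs.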

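Homework:

Find the most-visited page URLs, using sed for the statistical analysis

/topics/16689/replies/124751/edit: replace the numbers to get /topics/int/replies/int/edit

/_img/uploads/photo/2018/c54755ee-6bfd-489a-8a39-81a1d7551cbd.png!large becomes /_img/uploads/photo/2018/id.png!large

/topics/9497 becomes /topics/int

Other rules follow the same pattern

Output

The request count for each URL pattern

The top 10 URL patterns by request count

For example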

288 url1

3000 url2
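
One way the rules above could be sketched in a single sed call is shown below. This is an illustration under assumptions, not the reference answer: it assumes the URL is field 7, that the long image names are UUIDs in the 8-4-4-4-12 form of the example, and that query strings should be dropped; the function name `url_patterns` is made up.

```shell
# Sketch: normalise URLs into patterns, then count and rank them.
# $1 = log file (default nginx.log), $2 = how many patterns to show (default 10).
url_patterns() {
  awk '{print $7}' "${1:-nginx.log}" |
    sed -E \
      -e 's#[?].*$##' \
      -e 's#/(topics|replies)/[0-9]+#/\1/int#g' \
      -e 's#/[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}\.#/id.#g' |
    sort | uniq -c | sort -k1,1nr -k2,2 | head -n "${2:-10}"
}
```

Restricting the numeric rule to `/topics/` and `/replies/` leaves path segments such as `/2018/` alone, which matches the expected `/_img/uploads/photo/2018/id.png!large`. Further prefixes can be added to the alternation as needed.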

Replace the numbers with int

/topics/16689/replies/124751/edit: replace the numbers to get /topics/int/replies/int/edit

/topics/9497 becomes /topics/int

less nginx.log | awk '{print $7}' | sed -E 's#([0-9]+)#int#g'

The request count for each URL pattern

The top 10 URL patterns by request count

url_pattern(){

less nginx.log | awk '{print $7}' | sort | uniq -c | sort -nr | head -10

}

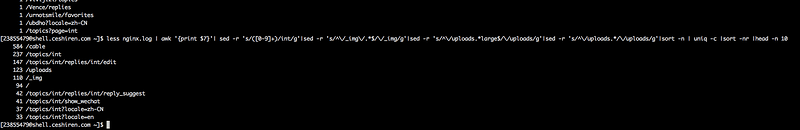

url_topten() {

less nginx.log | awk '{print $7}'| sed -r 's/([0-9]+)/int/g'|sed -r 's/^\/_img\/.*$/\/_img/g'|sed -r 's/^\/uploads.*large$/\/uploads/g'|sed -r 's/^\/uploads.*/\/uploads/g'|sort -n | uniq -c |sort -nr |head -n 10

}

cat nginx.log | awk '{print $7}' | sed -E -e 's#[0-9]+/#test/#g' \
-e 's#[?|!].*##g' \
-e 's#[0-9]+$#test#g' \
-e 's#[0-9a-z-]*\.png#.png#g' \
-e 's#[0-9a-z-]*\.jpg#.jpg#g' \
-e 's#[0-9a-z-]*\.gif#.gif#g' \
-e 's#[0-9a-z-]*\.jpeg#.jpeg#g' \
-e 's#[a-zA-Z0-9%&$@_]{15,}#:string:#g' \
-e 's#^/[0-9a-zA-Z]*$#/user#g' \
-e 's#%21large##g' \
-e 's#/cable#/cable===#g' \
-e 's#/hogwarts#/hogwarts===#g' |
sed -E 's#[a-zA-Z0-9%&$@_]{20,}#:string:#g' | sort | uniq -c | sort -nr | head -10

url_summary(){

awk '{print $7}' nginx.log | sed 's/?.*$//' | sed 's/\/[0-9]\+/\/int/g' | sed 's/\/[0-9a-z-]\+\./\/id\./' | sort | uniq -c | sort -nr | head -10

}

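This should have been enough... but the output ends up with things like id.rar, id.tar.gz and id.json, which arguably should not be turned into id. I spent ages trying to append (?!json) to exclude json, but that does not work in sed. In Python, /[0-9a-z-]+\\.(?!json) does match the image-id form while excluding .json. Not sure why..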
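
On the lookahead question: sed uses POSIX basic/extended regular expressions, which have no lookahead, while `(?!...)` is a Perl-style extension that Python's `re` module supports. One workaround that stays within sed is to list the extensions that should be rewritten instead of the ones to exclude. The sketch below assumes only png/jpg/jpeg/gif names should become id; `mask_image_ids` is a hypothetical helper name.

```shell
# Sketch: rewrite "/<name>.<ext>" to "/id.<ext>" only for image extensions.
# Reads URLs on stdin; anything else (.json, .tar.gz, .rar) passes through unchanged.
mask_image_ids() {
  sed -E 's#/[0-9a-z-]+\.(png|jpe?g|gif)#/id.\1#g'
}
```

It would slot into the pipeline above in place of the last sed, for example `awk '{print $7}' nginx.log | sed 's/?.*$//' | mask_image_ids`. If a true negative lookahead is preferred, a tool with Perl-style regexes such as `perl -pe` is another option.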

less nginx.log | awk '{print $7}' \
| sed "s@/topics/[0-9]*@/topics/int@g" \
| sed "s@/replies/[0-9]*@/replies/int@g" \
| sed "s@/2018/[a-z0-9-]*\.@/2018/id.@g" \
| sed "s@/2017/[a-z0-9-]*\.@/2017/id.@g" \
| sed "s@/2016/[a-z0-9-]*\.@/2016/id.@g" \
| sed "s@/2015/[a-z0-9-]*\.@/2015/id.@g" \
| sed "s@/2014/[a-z0-9-]*\.@/2014/id.@g" \
| sed "s@/avatar/[0-9]*@/avatar/int@g" \
| sed "s@/avatar/int/[0-9a-z]*\.@/avatar/int/id.@g" \
| sort | uniq -c | sort -nr | head -n 10